Tutorial - Compute Shader Filters


With DirectX 11, Microsoft introduced compute shaders (also known as DirectCompute), which build on programmable shaders and take advantage of the GPU to perform high-speed general-purpose computing. The idea is to use a shader, written in HLSL, to do something that is not strictly graphical. Unlike the usual shaders we write, compute shaders provide some form of memory sharing and thread synchronization, which widens what we can do with this tool. The execution of a compute shader is not attached to any stage of the graphics pipeline, even though it has access to graphics resources. When we dispatch a compute shader call, we spawn a number of GPU threads that run the shader code we wrote.

The main thing to understand about compute shaders is that, unlike pixel and vertex shaders, they are not bound to their input/output data; there is no implicit mapping between the thread that is executing our code and the data it processes. Every thread can potentially read from any memory location and write anywhere as well. They provide a way to use the GPU as a massive vectorized processor for generic calculations.

I've kept the tutorial simple, self-contained and as plain as possible. I've decided to read the data to process from an image and to output another image. Processing images is certainly not the only use for compute shaders, but since it is simpler to visualize what's going on with an image, I've decided to do it this way. Again, since there is no implicit correlation between threads and data, nobody is forcing us to process one pixel per thread; we could process four, five, or two hundred, it doesn't matter. For this tutorial we will go the straightforward way and process one pixel per thread, but bear in mind that this is not mandatory in any way.

Let's start.


1


I've tried to hide most of the Windows initialization fluff, so feel free to skip main.cpp, which just creates an instance of DXApplication (simply named application) and calls the following methods as shown.

    if( FAILED( InitWindow( hInstance, nCmdShow ) ) )
        return 0;

    if(!application.initialize(g_hWnd, width, height))
        return 0;

    // Main message loop
    MSG msg = {0};
    while( WM_QUIT != msg.message )
    {
        if( PeekMessage( &msg, NULL, 0, 0, PM_REMOVE ) )
        {
            TranslateMessage( &msg );
            DispatchMessage( &msg );
        }
        else
        {
            application.render();
        }
    }

    return ( int )msg.wParam;

I won't present either initialize or render because they are trivial. The former creates the DX11 device, loads the textures and so on, while the latter renders a big quad with the two textures applied. The only interesting bit is in initialize, where we invoke two methods, createInputBuffer and createOutputBuffer, to create the buffers the compute shader needs, and immediately afterwards we load and run the compute shader (runComputeShader). We will see these three functions in detail since they are the core of our tutorial.

        // ... last lines of DXApplication::initialize()

        // We then load a texture as a source of data for our compute shader
        if(!loadFullScreenQuad())
            return false;

        if(!loadTexture( L"data/fiesta.bmp", &m_srcTexture ))
            return false;

        if(!createInputBuffer())
            return false;

        if(!createOutputBuffer())
            return false;

        if(!runComputeShader( L"data/Desaturate.hlsl"))
            return false;

        return true;
    }

Pressing F1 and F2 switches between the two compute shaders we have. In code, this is done by calling runComputeShader( L"data/Desaturate.hlsl") and runComputeShader( L"data/Circles.hlsl") on the F1 and F2 key-up events respectively. The runComputeShader function loads the compute shader from the HLSL file and dispatches the thread groups.

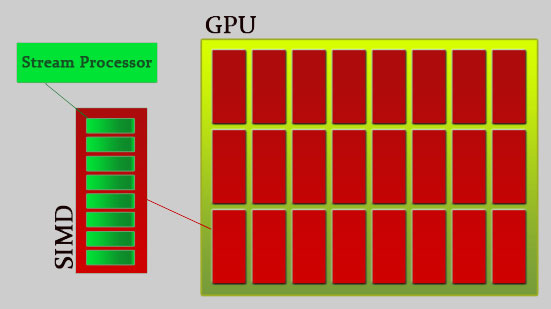

Before proceeding further, it is worth talking a bit about how the GPU works. Unlike a CPU, a GPU is made of many small processors called stream processors. Each of these can be used to run a thread and execute the code of our shader. Stream processors are packed together into blocks called SIMDs, each of which has its own local data share, cache, texture cache and fetch/decode unit.

This is actually a simplification; older video cards like the GeForce 6600, for example, had separate vertex, fragment and composite units (and they were not able to run compute shaders!). Anyway, for the sake of this tutorial we will stick to the simplified view of the GPU proposed above.

Image 1 - Simplified view of the GPU architecture.

On a GPU we have several SIMDs (e.g. on the ATI HD6970 we have 24 units), and each one can be used to run a group of threads. Without going too much into details, what we need to know for writing our compute shader is that we can create groups of threads that will have some shared memory and will be able to run concurrently. These threads are capable of reading from some buffers and writing anywhere into an output buffer.

So the two main things that set compute shaders apart from pixel shaders are shared memory between threads and the possibility of writing anywhere in the output buffer.

It's important to think about compute shaders in terms of threads that process data, not "pixels" or "vertices". We could work on 4 pixels for every thread, or we could make physics calculations to move rigid bodies around instead. Compute shaders allow us to use the GPU as a massively powerful vectorized parallel processor!
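To make the "several pixels per thread" idea concrete, here is a minimal CPU-side C++ sketch of one possible mapping from a thread index to the pixels it would handle. It is not part of the tutorial's source: `PixelRange` and `pixelsForThread` are hypothetical names, and giving each thread a run of consecutive pixels is just one assumption among many possible layouts.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

// Half-open range [first, last) of pixel indices handled by one thread.
// Hypothetical helper type, for illustration only.
struct PixelRange { std::uint32_t first, last; };

// Each thread takes `pixelsPerThread` consecutive pixels. Threads near the
// end of the image may receive fewer pixels, or none at all.
PixelRange pixelsForThread(std::uint32_t threadIndex,
                           std::uint32_t pixelsPerThread,
                           std::uint32_t pixelCount)
{
    assert(pixelsPerThread > 0);
    std::uint32_t first = std::min(threadIndex * pixelsPerThread, pixelCount);
    std::uint32_t last  = std::min(first + pixelsPerThread, pixelCount);
    return { first, last };
}
```

With 4 pixels per thread and a 10-pixel image, thread 0 gets pixels 0 to 3, thread 2 gets pixels 8 and 9, and thread 3 gets an empty range. Whether consecutive or interleaved assignment performs better depends on the hardware and the access pattern, so treat this layout as a starting point only.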


2


Now we know that we can spawn threads on the GPU and that we have to organize these threads in groups. How does this translate into code? We specify the number of threads we spawn directly inside the shader code. This is done with the following syntax:

    [numthreads(X, Y, Z)]
    void ComputeShaderEntryPoint( /* compute shader parameters */ )
    {
        // ... Compute shader code
    }

Where X, Y and Z represent the group's size per axis. This means that if we specify X = 8, Y = 8 and Z = 1 we get 8*8*1 = 64 threads per group.

But why can't we just specify a single number, say 64, instead? Why do we need to specify a size per axis? Well, effectively the only reason is to have a handy way to access threads that work on matrices (like images). In fact we can get the current thread id from the following system generated values:

uint3 groupID : SV_GroupID

- Index of the group within the dispatch for each dimension

uint3 groupThreadID : SV_GroupThreadID

- Index of the thread within the group for each dimension

uint groupIndex : SV_GroupIndex

- A sequential index within the group that starts from 0 top left back and goes on to bottom right front

uint3 dispatchThreadID : SV_DispatchThreadID

- Global thread index within the whole dispatch
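How these four values relate to each other can be written out on the CPU. The following C++ sketch is not part of the tutorial's source; the `UInt3` type and both function names are made up for illustration. It follows the relationships given in the HLSL documentation: the dispatch thread ID is the group ID multiplied by the group size plus the group thread ID, and the group index is the flattened group thread ID.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical stand-in for HLSL's uint3; not a Direct3D type.
struct UInt3 { std::uint32_t x, y, z; };

// SV_DispatchThreadID = SV_GroupID * numthreads + SV_GroupThreadID, per axis.
UInt3 dispatchThreadId(UInt3 groupId, UInt3 groupThreadId, UInt3 numThreads)
{
    assert(groupThreadId.x < numThreads.x &&
           groupThreadId.y < numThreads.y &&
           groupThreadId.z < numThreads.z);
    return { groupId.x * numThreads.x + groupThreadId.x,
             groupId.y * numThreads.y + groupThreadId.y,
             groupId.z * numThreads.z + groupThreadId.z };
}

// SV_GroupIndex = z * X * Y + y * X + x, where (X, Y, Z) is numthreads.
std::uint32_t groupIndex(UInt3 groupThreadId, UInt3 numThreads)
{
    return groupThreadId.z * numThreads.x * numThreads.y
         + groupThreadId.y * numThreads.x
         + groupThreadId.x;
}
```

For example, with a group size of (32, 16, 1), thread (5, 3, 0) of group (2, 1, 0) has dispatch thread ID (69, 19, 0) and group index 101.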

We need these values to discriminate what data to access with which thread. Let's see, for instance, how we write a simple desaturation compute shader.

    [numthreads(32, 16, 1)]
    void CSMain( uint3 dispatchThreadID : SV_DispatchThreadID )
    {
        float3 pixel = readPixel(dispatchThreadID.x, dispatchThreadID.y);
        pixel.rgb = pixel.r * 0.3 + pixel.g * 0.59 + pixel.b * 0.11;
        writeToPixel(dispatchThreadID.x, dispatchThreadID.y, pixel);
    }

So this compute shader has groups of 32 * 16 * 1 = 512 threads (for reference, the maximum number of threads per group is 768 with a maximum Z of 1 in cs_4_x, and 1024 with a maximum Z of 64 in cs_5_0). The only parameter of the function is the global thread ID, which, since we operate on an image, happens to be the pixel's X and Y. The functions readPixel and writeToPixel are shown further down the page, when we talk about buffers; here we want to focus just on the logic. We call readPixel, which reads the corresponding pixel from the image we have provided to the shader. We then desaturate the pixel and save the result into the variable pixel itself. Finally, we use writeToPixel to output our new value to the output image.

Fairly simple, isn't it?

Now, what is the C++ code that starts our groups? Let's have a look:

    /**
     * Run a compute shader loaded by file
     */
    bool DXApplication::runComputeShader( LPCWSTR shaderFilename )
    {
        // Some service variables
        ID3D11UnorderedAccessView* ppUAViewNULL[1] = { NULL };
        ID3D11ShaderResourceView* ppSRVNULL[2] = { NULL, NULL };

        // We load and compile the shader. If we fail, we bail out here.
        if(!loadComputeShader( shaderFilename, &m_computeShader ))
            return false;

        // We now set up the shader and run it
        m_pImmediateContext->CSSetShader( m_computeShader, NULL, 0 );
        m_pImmediateContext->CSSetShaderResources( 0, 1, &m_srcDataGPUBufferView );
        m_pImmediateContext->CSSetUnorderedAccessViews( 0, 1, &m_destDataGPUBufferView,
            NULL );

        m_pImmediateContext->Dispatch( 32, 21, 1 );

        m_pImmediateContext->CSSetShader( NULL, NULL, 0 );
        m_pImmediateContext->CSSetUnorderedAccessViews( 0, 1, ppUAViewNULL, NULL );
        m_pImmediateContext->CSSetShaderResources( 0, 2, ppSRVNULL );

        ...

There is nothing complicated here, but the code deserves some comments. Ignoring the service variables that we use to clear up input and output buffers, the first interesting line is the one that loads the shader. Since we run the shader every time we hit either F1 or F2, we reload it and recompile it every time.

Once the shader is loaded we run it. The method CSSetShader sets our compute shader in place. CSSetShaderResources and CSSetUnorderedAccessViews are used to set our input and output buffers. We'll see these in more detail in section 3; what is important here is that m_srcDataGPUBufferView is an input buffer for the compute shader and contains our input image data, while m_destDataGPUBufferView is an output buffer that will contain our output image data.

The most important call here is Dispatch. This is where we tell DX11 to run the shader, and it's also where we specify how many groups we create. Since we have decided to run one thread per pixel, we need to dispatch enough groups to cover the whole image: the picture dimensions are 1024x336x1 and we spawn 32x16x1 threads for every group, so we need 1024/32 = 32 groups along X and 336/16 = 21 groups along Y, that is 32x21x1 groups, to perfectly cover the image. With the very last lines we reset the DX11 state.

So far we have seen how to specify threads and groups of threads. This is half of what we need to know about compute shaders. The other half is how to provide the data to the GPU for the compute shader to work.
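The group counts passed to Dispatch can be derived from the image size rather than typed in by hand. The helper below is a small sketch under that assumption; `groupCount` is a hypothetical name and does not appear in the tutorial's source. It uses a ceiling division so that an image whose size is not a multiple of the group size is still fully covered.

```cpp
#include <cassert>
#include <cstdint>

// Number of groups needed along one axis so that
// groups * threadsPerGroup >= size (ceiling division).
std::uint32_t groupCount(std::uint32_t size, std::uint32_t threadsPerGroup)
{
    assert(threadsPerGroup > 0);
    return (size + threadsPerGroup - 1) / threadsPerGroup;
}
```

For the 1024x336 image and 32x16 threads per group, `groupCount(1024, 32)` gives 32 and `groupCount(336, 16)` gives 21. If the image size were not an exact multiple of the group size, the last groups would contain threads that fall outside the image, and the shader would then need a bounds check before reading or writing.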


3


Compute shaders can take buffer input in two flavours: byte address buffers (raw buffers) and structured buffers. We will stick with structured buffers in this tutorial, but as usual it's important to bear in mind what the cache is doing; it's the typical problem of Structure of Arrays vs Array of Structures.

Our structured buffer, as defined in the compute shader, looks like this:
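For readers who have not met the Structure of Arrays vs Array of Structures question before, here is a CPU-side C++ sketch of the two layouts for an RGBA image. None of these types come from the tutorial's source; `PixelAoS`, `ImageSoA` and the helper functions are hypothetical names chosen for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Array of Structures: the four channels of one pixel sit next to each other.
struct PixelAoS { float r, g, b, a; };
using ImageAoS = std::vector<PixelAoS>;

// Structure of Arrays: each channel lives in its own contiguous array.
struct ImageSoA {
    std::vector<float> r, g, b, a;
    explicit ImageSoA(std::size_t n) : r(n), g(n), b(n), a(n) {}
};

// Converts from the AoS layout to the SoA layout.
ImageSoA toSoA(const ImageAoS& src)
{
    ImageSoA dst(src.size());
    for (std::size_t i = 0; i < src.size(); ++i) {
        dst.r[i] = src[i].r;
        dst.g[i] = src[i].g;
        dst.b[i] = src[i].b;
        dst.a[i] = src[i].a;
    }
    assert(dst.r.size() == src.size());
    return dst;
}

// Reads only the red channel: strided access in AoS, contiguous in SoA.
float sumRedAoS(const ImageAoS& img) { float s = 0; for (const PixelAoS& p : img) s += p.r; return s; }
float sumRedSoA(const ImageSoA& img) { float s = 0; for (float v : img.r) s += v; return s; }
```

Both functions compute the same sum, but the AoS version skips over three unused floats per pixel while the SoA version reads one contiguous array. Which layout is preferable depends on whether the code usually touches all channels of a pixel together or one channel across many pixels.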

    struct Pixel
    {
        int colour;
    };

    StructuredBuffer<Pixel> Buffer0 : register(t0);

The structure contains a single element, thus we could have easily used the raw buffer, but we may have wanted to specify r,g,b,a as four floats, in which case it would have been useful to pack them into the structure.

I've decided to use an int to encode the colour just to do the bit-shifting operations inside the shader. Clearly this is not the best use of the GPU, which prefers to play with floats and vectors, but I was interested in using these new operations that come with shader models 4 and 5; we do it purely for educational purposes.

Now, to create the structured buffer with DX11 we use the following code:

    /**
     * Once we have the texture data in RAM we create a GPU buffer to feed the
     * compute shader.
     */
    bool DXApplication::createInputBuffer()
    {
        if(m_srcDataGPUBuffer)
            m_srcDataGPUBuffer->Release();
        m_srcDataGPUBuffer = NULL;

        if(m_srcTextureData)
        {
            // First we create a buffer in GPU memory
            D3D11_BUFFER_DESC descGPUBuffer;
            ZeroMemory( &descGPUBuffer, sizeof(descGPUBuffer) );
            descGPUBuffer.BindFlags = D3D11_BIND_UNORDERED_ACCESS |
                D3D11_BIND_SHADER_RESOURCE;
            descGPUBuffer.ByteWidth = m_textureDataSize;
            descGPUBuffer.MiscFlags = D3D11_RESOURCE_MISC_BUFFER_STRUCTURED;
            descGPUBuffer.StructureByteStride = 4; // We assume the data is in the
                                                   // RGBA format, 8 bits per channel

            // Zero-initialized so the pitch fields, unused for buffers, are not garbage
            D3D11_SUBRESOURCE_DATA InitData;
            ZeroMemory( &InitData, sizeof(InitData) );
            InitData.pSysMem = m_srcTextureData;
            if(FAILED(m_pd3dDevice->CreateBuffer( &descGPUBuffer, &InitData,
                &m_srcDataGPUBuffer )))
                return false;

            // Now we create a view on the resource. DX11 requires you to send the data
            // to shaders using a "shader view"
            D3D11_BUFFER_DESC descBuf;
            ZeroMemory( &descBuf, sizeof(descBuf) );
            m_srcDataGPUBuffer->GetDesc( &descBuf );

            D3D11_SHADER_RESOURCE_VIEW_DESC descView;
            ZeroMemory( &descView, sizeof(descView) );
            descView.ViewDimension = D3D11_SRV_DIMENSION_BUFFEREX;
            descView.BufferEx.FirstElement = 0;
            descView.Format = DXGI_FORMAT_UNKNOWN;
            descView.BufferEx.NumElements = descBuf.ByteWidth / descBuf.StructureByteStride;

            if(FAILED(m_pd3dDevice->CreateShaderResourceView( m_srcDataGPUBuffer,
                &descView, &m_srcDataGPUBufferView )))
                return false;

            return true;
        }
        else
            return false;
    }

This function assumes that we have already loaded the image from disc and saved all the pixels into m_srcTextureData.

What we do is create a buffer resource that can be bound to the shader both as a shader resource and for unordered access. We also set the structured buffer flag and provide the stride between two elements (4 bytes: one byte per channel).

If the buffer is created without problems, we also create a shader resource view, which is the DX11 way to provide data to the shaders.

In a very similar way we create the output buffer:

    /**
     * We know the compute shader will output on a buffer which is
     * as big as the texture. Therefore we need to create a
     * GPU buffer and an unordered resource view.
     */
    bool DXApplication::createOutputBuffer()
    {
        // The compute shader will need to output to some buffer so here
        // we create a GPU buffer for that.
        D3D11_BUFFER_DESC descGPUBuffer;
        ZeroMemory( &descGPUBuffer, sizeof(descGPUBuffer) );
        descGPUBuffer.BindFlags = D3D11_BIND_UNORDERED_ACCESS |
            D3D11_BIND_SHADER_RESOURCE;
        descGPUBuffer.ByteWidth = m_textureDataSize;
        descGPUBuffer.MiscFlags = D3D11_RESOURCE_MISC_BUFFER_STRUCTURED;
        descGPUBuffer.StructureByteStride = 4; // We assume the output data is
                                               // in the RGBA format, 8 bits per channel

        if(FAILED(m_pd3dDevice->CreateBuffer( &descGPUBuffer, NULL,
            &m_destDataGPUBuffer )))
            return false;

        // The view we need for the output is an unordered access view.
        // This is to allow the compute shader to write anywhere in the buffer.
        D3D11_BUFFER_DESC descBuf;
        ZeroMemory( &descBuf, sizeof(descBuf) );
        m_destDataGPUBuffer->GetDesc( &descBuf );

        D3D11_UNORDERED_ACCESS_VIEW_DESC descView;
        ZeroMemory( &descView, sizeof(descView) );
        descView.ViewDimension = D3D11_UAV_DIMENSION_BUFFER;
        descView.Buffer.FirstElement = 0;
        // Format must be DXGI_FORMAT_UNKNOWN when creating
        // a view of a structured buffer
        descView.Format = DXGI_FORMAT_UNKNOWN;
        descView.Buffer.NumElements = descBuf.ByteWidth / descBuf.StructureByteStride;

        if(FAILED(m_pd3dDevice->CreateUnorderedAccessView( m_destDataGPUBuffer,
            &descView, &m_destDataGPUBufferView )))
            return false;

        return true;
    }

Very similar indeed, apart from the view, which in this case is an unordered access view.

The last thing worth showing is the full shader for the desaturation effect. Bear in mind that this is anything but optimal; it's written mainly for experimentation and educational purposes!

    struct Pixel
    {
        int colour;
    };

    StructuredBuffer<Pixel> Buffer0 : register(t0);
    RWStructuredBuffer<Pixel> BufferOut : register(u0);

    // Unpacks one pixel into floats in [0, 1]. The image width (1024) is hard-coded.
    float3 readPixel(int x, int y)
    {
        float3 output;
        uint index = (x + y * 1024);

        output.x = (float)(((Buffer0[index].colour ) & 0x000000ff)      ) / 255.0f;
        output.y = (float)(((Buffer0[index].colour ) & 0x0000ff00) >> 8 ) / 255.0f;
        output.z = (float)(((Buffer0[index].colour ) & 0x00ff0000) >> 16) / 255.0f;

        return output;
    }

    // Packs a float colour back into an int. The alpha byte is left at zero.
    void writeToPixel(int x, int y, float3 colour)
    {
        uint index = (x + y * 1024);

        int ired   = (int)(clamp(colour.r,0,1) * 255);
        int igreen = (int)(clamp(colour.g,0,1) * 255) << 8;
        int iblue  = (int)(clamp(colour.b,0,1) * 255) << 16;

        BufferOut[index].colour = ired + igreen + iblue;
    }

    [numthreads(32, 16, 1)]
    void CSMain( uint3 dispatchThreadID : SV_DispatchThreadID )
    {
        float3 pixel = readPixel(dispatchThreadID.x, dispatchThreadID.y);
        pixel.rgb = pixel.r * 0.3 + pixel.g * 0.59 + pixel.b * 0.11;
        writeToPixel(dispatchThreadID.x, dispatchThreadID.y, pixel);
    }
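A CPU reference of the same logic can be handy when checking what the shader writes. The C++ sketch below mirrors readPixel, writeToPixel and CSMain under the same assumptions as the shader (red in the low byte, 8 bits per channel, alpha ignored). It is not part of the tutorial's source, and `Float3`, `unpack`, `pack`, `desaturate` and `desaturateBuffer` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>

struct Float3 { float r, g, b; };

// Mirrors readPixel: red in bits 0-7, green in bits 8-15, blue in bits 16-23.
Float3 unpack(std::uint32_t colour)
{
    return { static_cast<float>( colour        & 0xffu) / 255.0f,
             static_cast<float>((colour >> 8)  & 0xffu) / 255.0f,
             static_cast<float>((colour >> 16) & 0xffu) / 255.0f };
}

// Mirrors writeToPixel: clamp to [0, 1], scale to 0-255 (truncating), repack.
std::uint32_t pack(Float3 c)
{
    auto toByte = [](float v) {
        return static_cast<std::uint32_t>(std::min(std::max(v, 0.0f), 1.0f) * 255.0f);
    };
    return toByte(c.r) | (toByte(c.g) << 8) | (toByte(c.b) << 16);
}

// Mirrors CSMain for a single pixel.
std::uint32_t desaturate(std::uint32_t colour)
{
    Float3 p = unpack(colour);
    float grey = p.r * 0.3f + p.g * 0.59f + p.b * 0.11f;
    return pack({ grey, grey, grey });
}

// Plays the role of the whole dispatch: one loop iteration per GPU thread.
void desaturateBuffer(const std::uint32_t* src, std::uint32_t* dst, std::size_t count)
{
    assert(src != nullptr && dst != nullptr);
    for (std::size_t i = 0; i < count; ++i)
        dst[i] = desaturate(src[i]);
}
```

Because the conversion back to an integer truncates rather than rounds, and because GPU and CPU floating-point results are not guaranteed to match bit for bit, a comparison against the shader output should probably tolerate a difference of one step per channel.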

The source code provided has a few more functionalities, mainly to read the texture and render the result back on screen. Since this has nothing to do with compute shaders I haven't included it in here, but there are a few comments in code so that you have an idea of what is going on. Still, it's all very simple and linear.

And that's it. I hope you have enjoyed the tutorial, and happy hacking with compute shaders!
